👋 Hi, I’m Melissa and welcome to my biweekly Note, Language Processor. Every other Tuesday, I dig deep into language & behavior, the limits of technologies, and the connection between what people say and do. Once a month, Signal Lab takes over and covers these topics for startups and early-stage investors.

An increasing number of VC firms are touting themselves as “Quant VC”.

But is Quant VC an oxymoron?

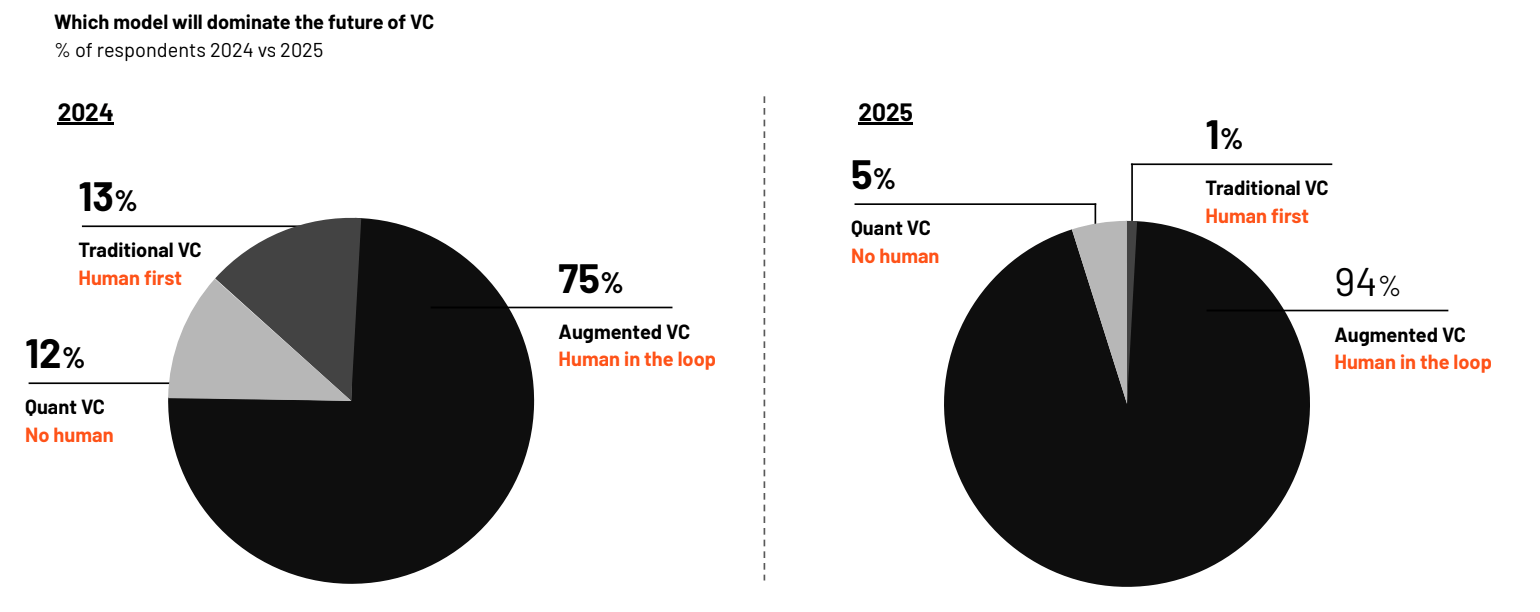

In 2024, VCs were bullish on both Quant VC and Traditional VC. In 2025, we saw that confidence drop, in both human-first and no-human approaches.

Source: DDVC 2025 Report

They’re right to be skeptical: developing a Quant model capable of extracting signal from startups (that is, predicting success) is not just hard work, it might be impossible.

What even is Quant VC?

Many “Quant VCs” claim inspiration from RenTec. Renaissance Technologies, a hedge fund most famous for its Medallion Fund, averaged gross annual returns of over 66% across a 30-year period. Jim Simons, a mathematician and codebreaker, founded RenTec to apply statistical analysis and quantitative models to systematize public-markets trading. After Simons, the next two CEOs at RenTec were computer scientists specializing in computational linguistics.

Many VC firms use data science and quantitative models to inform their investment decisions, though these approaches differ significantly from the Quant trading in public markets practiced by firms like RenTec.

When most VCs say “quant”, they usually mean “augmented”.

Quant = automated, based on statistical signals. Algorithm-led, systematized investment decisions.

Augmented = human in the loop. Human-led investment decisions, supported by quantitative approaches and data.

In VC, data-driven quantitative approaches can be used to find deals, diligence deals, win deals, support deals, or balance a portfolio for risk. In particular, AI tools that screen at the top of the funnel for investment criteria (e.g., market size, growth projections, team profile) have grown increasingly popular among VCs. This works as an engine that saves time on inbound and generates outbound, allowing VCs to allocate attention only to the most promising leads. It saves time, it reduces noise. But is it Quant? No. It’s a very basic process function.

Firms like Correlation Ventures, AngelList Early Stage Quant Fund, SignalFire, Two Sigma Ventures (spun out of the quant hedge fund), or self-proclaimed Quant VCs like QuantumLight and Moonfire Ventures use more sophisticated data-driven and quantitative techniques to automate due diligence, systematically score companies, and perform pattern recognition. The appeals of a quant approach are considerable, and indeed firms like Blue Moon (formerly 2.12 Ventures) have applied a “Quant Seed Fund process” to become a top 1% fund from their vintage, explicitly inspired by RenTec. But, still, is “Quant” the right term? What even is Quant?

Quant is rapid

In general, quantitative data science requires consistent long-term data, with enough out-of-sample data to test hypotheses and reach statistical significance. Venture offers long-term data, but it gets stale. While stock trading (the home of Quant) generates massive quantities of new data points every day, venture data takes years to build out and test. Traditional quantitative trading uses that continuously refreshing data for rapid, high-frequency trades (holding periods of hours to weeks), which allows for quick model validation and iteration. VC investments do not allow this, making it difficult to attribute eventual returns to a specific model or early signal. In fact, the feedback loop for evaluating the effectiveness of a VC process frequently outlasts VCs themselves!

Quant requires mega data points

Quant requires vast quantities of data.

The magnitude of venture data is ill-fitted to a traditional Quant approach. Sure, 137,000 startups are launched every day (on average), but only 24,000 (or 1500?!) of those actually get funded.

When venture firms tout “We’ve analyzed more than 2 million data points!”, the question is: on how many startups? Rarely is it more than a few thousand. That’s not enough. Machine learning typically requires tens of thousands to hundreds of thousands of observations to find patterns in the data that reflect real-world realities (more on that next).
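To make the "data points versus startups" distinction concrete, here is a minimal sketch. The 2,000,000 figure is the one quoted above; the count of 2,000 startups is an assumed example, not a number from any specific firm, and `rows_and_columns` is a hypothetical helper name.

```python
def rows_and_columns(total_data_points: int, n_startups: int) -> tuple[int, float]:
    """Split a headline 'data points' figure into observations (startups)
    and the average number of data points recorded per observation."""
    if n_startups <= 0:
        raise ValueError("n_startups must be positive")
    return n_startups, total_data_points / n_startups


# 2,000,000 is the headline figure quoted above; 2,000 startups is an assumption.
observations, points_each = rows_and_columns(2_000_000, 2_000)
print(f"{observations} observations, ~{points_each:.0f} data points each")
```

A model learns from the number of observations, not the number of cells in the spreadsheet. Under these assumed numbers there are far more columns per startup than the sample can support.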

Quant chokes on the power law

Most VCs are looking for unicorns but, by definition, those outcomes are a very small proportion of startups.

Unicorns comprise roughly 0.00006% of companies (3 in 5 million).

This power-law distribution, the backbone of venture capital by which one investment returns the entire fund, obstructs a Quant approach. Statistically, this is difficult to model systematically: the positive class of the “outcome variable” is vanishingly rare among observations, a severe class imbalance which can only be corrected with (you guessed it) more data!

But there is no more data on unicorns than there are unicorns, and they are slow to mint (150 new unicorns globally in 2024, about the same in 2025).

That’s not enough to stand up predictions using ML. At best, you’re drawing correlations or interactions, and you’re at a huge risk of overfitting in any case.
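The overfitting risk can be illustrated with a small simulation. This is a sketch under stated assumptions, not anyone's actual model: every feature is pure noise, the 1% "success" rate and the sample sizes are made up for illustration, and `hit_rate` and `simulate` are hypothetical names.

```python
import random


def hit_rate(X, y, j):
    """Share of successes among startups where feature j is present."""
    flagged = [yi for xi, yi in zip(X, y) if xi[j]]
    return sum(flagged) / len(flagged) if flagged else 0.0


def simulate(n_startups=2000, n_features=200, base_rate=0.01, seed=0):
    """Pick the noise feature that looks best in-sample, then re-test it
    on a fresh sample drawn the same way."""
    rng = random.Random(seed)

    def sample():
        # Features are coin flips and outcomes are independent of them,
        # so any apparent signal is coincidence by construction.
        X = [[rng.random() < 0.5 for _ in range(n_features)]
             for _ in range(n_startups)]
        y = [rng.random() < base_rate for _ in range(n_startups)]
        return X, y

    X_train, y_train = sample()
    X_test, y_test = sample()
    best = max(range(n_features), key=lambda j: hit_rate(X_train, y_train, j))
    return hit_rate(X_train, y_train, best), hit_rate(X_test, y_test, best)


in_sample, out_of_sample = simulate()
print(f"best feature in-sample hit rate:  {in_sample:.3%}")
print(f"same feature on fresh data:       {out_of_sample:.3%}")
```

Because the best-looking feature is selected from hundreds of candidates against a handful of positive outcomes, its in-sample hit rate will tend to sit above the base rate even though it carries no information; on fresh data it has no reason to.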

David Magerman, former quant at RenTec and now a VC at Differential Ventures, doesn’t call himself a quant now. For these reasons. He says, “We don’t do a lot of statistical modeling for our investment strategies because I don’t believe there’s a data set out there that would represent the right training data to even explore whether there’d be correlations, much less another test set that we could use that isn’t overfit.”

Now, a quant approach will work better for VCs optimizing for a pegasus (<$1BN exit) rather than a unicorn. This allows for more data to be used for correlations of success. But unicorns, no.

Quant is measurable

The factors that go into startup success are hard to measure, unreliable, or opaque, particularly at the early stage. An unpleasant fact: factors which are difficult to measure are often measured incorrectly. In Quant, these errors can wash out because there is so much data that errors don’t skew conclusions. If you can impute missing data (i.e., fill in estimated fake values as part of the statistical process), you can handle some errors! But in a venture capital model, errors loom larger because the datasets are smaller. And financial data has already demonstrated high vulnerability (as high as 75%) to errors in automated text analysis. Errors on errors!
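Why errors "wash out" at scale can be sketched with the textbook standard error of a mean. This assumes measurement errors are random and independent, which is a simplification; the noise level of 1.0 and the two sample sizes are arbitrary illustration values.

```python
import math


def standard_error(noise_sd: float, n_observations: int) -> float:
    """Standard error of an average of n independent noisy measurements."""
    return noise_sd / math.sqrt(n_observations)


# Same per-measurement noise, very different sample sizes (assumed values).
for n in (1_000, 1_000_000):
    print(f"n={n:>9,}: standard error ~ {standard_error(1.0, n):.4f}")
```

The uncertainty shrinks with the square root of the sample size, so a dataset a thousand times larger is only about thirty times more precise. Note that this only helps with random noise: a systematic error, such as every company defining ARR differently, does not average away no matter how much data there is.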

Public markets generate huge amounts of structured, real-time data ideal for complex algorithms. In VC, more of the data is unstructured, less frequent, and often private. On top of that, there is no guarantee that measures are standardized or comparable; there are fewer regulations, which leaves more flexibility in reporting. For instance, did ARR include only signed contracts or also contracts in the process of closing? Does the number of investors include friends and family and angel rounds or just institutionals? And a big one: how do you measure qualitative factors like team dynamics, market vision, and relationships, which are harder to quantify?

There’s better data in later-stage venture: more detailed, audited, verified (but still not public, so there is a trust risk based on source). But at any stage, building a true quant model is a nontrivial resource spend (to say the least). And David Magerman, for one, isn’t convinced that it’s justifiable, given that the methodology cannot yield significance in real-world prediction for VC.

Augmentation: the way forward

A subscription to one of many quantitative tools (e.g., Harmonic, Synaptic, Specter) does not a Quant VC make. Even Boardy, a venture AI, is neither a Quant nor autonomous.

But most VCs, especially ones with established and studied processes, do not need full-blown ML models and “Quant VC” at all. A few simple questions about exit, team, and investors will often do more, and more reliably, than a sophisticated model. It’s a dirty secret that simple binary (yes/no) predictors, across domains and decades, often outperform sophisticated models, especially when data is limited or overly complex. Don’t overthink it! Simplify your data architecture. Even RenTec, says David Magerman, was successful at deploying statistical models, but in a “much more limited way than people give them credit for.”
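A "few simple questions" approach can be as small as an equally weighted yes/no checklist. The sketch below is illustrative only: the three questions are hypothetical stand-ins for exit, team, and investors, not a recommended or validated set, and the equal weighting is an assumption.

```python
from dataclasses import dataclass


@dataclass
class Deal:
    # Hypothetical yes/no questions; swap in whatever a firm already asks.
    credible_exit_path: bool
    team_has_shipped_before: bool
    strong_existing_investors: bool


def checklist_score(deal: Deal) -> int:
    """Count the 'yes' answers, giving every question the same weight."""
    return sum([
        deal.credible_exit_path,
        deal.team_has_shipped_before,
        deal.strong_existing_investors,
    ])


deal = Deal(credible_exit_path=True,
            team_has_shipped_before=False,
            strong_existing_investors=True)
print(f"checklist score: {checklist_score(deal)} of 3")
```

There are no fitted weights here, which is the point: with nothing estimated from a small dataset, there is nothing to overfit, and a human still decides what the score means.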

Magerman himself suggests an augmented approach: “You can’t trust statistical significance measures and traditional metrics of doing the kind of machine or data science you typically do, it’s more a matter of trying to maximize the value of your data – looking at factor models, trying to extract features that are correlated with success and then deciding for yourself whether you think that those features’ correlation is indicative of causality or just correlation or coincidence.” Very human in the loop, treating the model output as directional, not absolute.

To put it bluntly, when “Quant VC” claims to use Quant to predict success or failure of a company, it is overfitting. Overfitting is invalid. In other words, the prediction is not supported by the data available in venture. It’s snake oil!

Instead, an augmented approach uses deviations in the data to characterize companies, challenge intuition, and surface new insights, acting as a thought partner in decision-making. This is the best use of quantitative techniques: data complementing, rather than completely replacing, the investment decision process.

And that works.

A final thought: Quant VC disrupts itself. Especially at the early stage, “Quant VCs” are automating themselves out of a privileged position, one they attained by virtue of their professed ability to pick winners in qualitative, low-signal environments. To shift the process is to shift the value proposition, and what are LPs meant to think of that?

Is Quant VC the snake eating its tail?

See you in two weeks.